Development of an Image Generation Module via Diffusion Models

Exploring, implementing and comparing different Diffusion Models for image generation.

WARNING

This page was translated with AI. The content may contain small errors. Images aren't translated.

Abstract

This work presents the development of a Python software package for image generation via diffusion models. Diffusion models are an emerging class of generative models that have demonstrated exceptional results in creating high-quality images, surpassing earlier techniques such as Generative Adversarial Networks (GANs) in many respects.

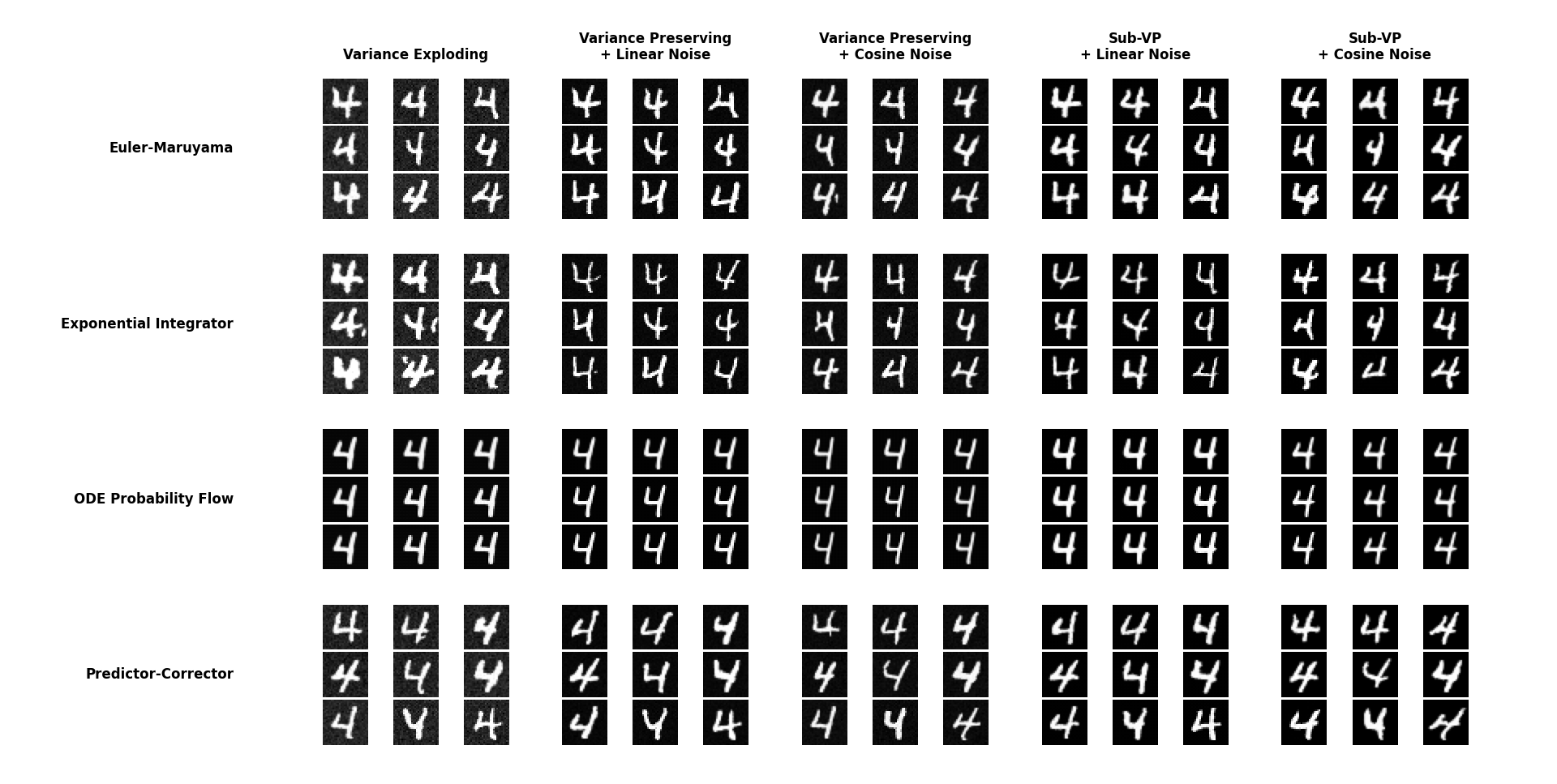

Our package implements three main variants of diffusion processes: Variance Exploding (VE), Variance Preserving (VP), and Sub-Variance Preserving (Sub-VP), each with distinctive characteristics for different use cases. For sampling, we provide four implementations: Euler-Maruyama, Predictor-Corrector, Probability Flow ODE, and Exponential Integrator, so users can trade off speed against quality as their requirements dictate. In addition, we include two noise schedulers (linear and cosine) that control how noise is added during the diffusion process.

A notable feature of the package is its capacity for controllable generation, including grayscale image colorization, imputation of missing regions, and class-conditioned generation. These functionalities significantly expand the scope of practical applications, from image restoration to the creation of specific content.

To ensure usability, we designed an intuitive programming interface complemented by an interactive dashboard, facilitating both programmatic use and visual experimentation. The package also includes standard metrics (bits per dimension, BPD; Fréchet Inception Distance, FID; Inception Score, IS) for the quantitative evaluation of results.

The experiments performed demonstrate the effectiveness of our implementation in various generative tasks, producing high-quality images even with models trained on limited datasets. The code is structured in a modular and extensible way, facilitating the incorporation of new functionalities and adaptation to specific use cases.

Going beyond the basic requirements, the system also includes tools for secure serialization, an interactive dashboard, and auto-generated documentation.

1. Introduction

Image generation via machine learning represents one of the most fascinating and rapidly developing fields within artificial intelligence. In recent years, we have witnessed significant advances that have transformed our ability to create high-quality visual content automatically.

In this context, diffusion models have emerged as a particularly promising paradigm, offering substantial advantages over previous approaches like Generative Adversarial Networks (GANs). Their theoretical foundation in well-established stochastic processes provides an elegant framework for image generation, with more stable training and high-fidelity results.

This project focuses on the development of a Python software package for diffusion-based image generation, which implements different variants of these processes and provides flexibility regarding sampling methods, noise scheduling, and controllable generation tasks. Our implementation allows not only for generating images from random noise but also for more specific tasks like colorizing grayscale images, imputing missing regions, and class-conditioned generation.

The package has been designed with an emphasis on modularity and extensibility, allowing users to adapt each component according to their specific needs. Furthermore, it includes commonly used evaluation metrics in the field to facilitate comparison between different configurations and with other generation methods.

Motivation

The main motivation for developing this package stems from the need for accessible and flexible tools for the research and application of diffusion models. While there are reference implementations for specific models, we consider it valuable to provide a library that allows for experimenting with different configurations and systematically comparing their performance.

Moreover, the incorporation of controllable generation capabilities responds to the growing demand for methods that allow for greater control over the generative process, facilitating its application in contexts where certain constraints or specific characteristics in the generated images must be respected.

Another key motivation is facilitating research with custom components, simplifying the process of sharing models between teams. Our system serializes both trained parameters and the necessary class definitions, eliminating the need to manually share source code or maintain exact dependencies between environments. To ensure security, we implement an optional loading mechanism that requires explicit user confirmation and executes the code in a restricted environment, thus allowing collaboration without compromising system security.

Objectives

The main objectives of this project are:

- Develop a modular and extensible library for image generation via diffusion models.

- Implement different variants of diffusion processes, sampling methods, and noise schedules.

- Incorporate functionalities for controllable generation, including colorization, imputation, and class conditioning.

- Provide standard metrics for evaluating the quality of generated images.

- Create an interactive user interface to facilitate experimentation with the different components of the package.

- Thoroughly document both the code and the underlying theoretical foundations.

- Implement a secure serialization system for loading and saving custom classes.

Document Structure

The remainder of this document is organized as follows:

The Development chapter presents the software development, including work planning, requirements analysis, package design, and testing.

The Results chapter describes the results obtained, including examples of package usage and the project’s conclusions.

Finally, several appendices are included with additional technical material, complementary examples, and exhaustive comparisons between different package configurations.

1.1. State of the Art

The field of image generation via machine learning techniques has advanced significantly in recent years, with diffusion models standing out. These models have proven to be a promising alternative to Generative Adversarial Networks (GANs) and autoregressive models, offering high-quality samples with more stable training.

Diffusion Models

Diffusion models gradually add noise to data through a fixed process and learn to reverse it. This approach can be formulated rigorously in terms of Stochastic Differential Equations (SDEs). The noising process defines a trajectory from the original data to pure noise, and the reverse-time process allows for generating new samples.
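For reference, the score-based SDE formulation of Song et al. [3] writes the noising process and its time reversal as follows. Here ∇ₓ log p_t(x) is the score that the network learns to approximate, and w̄ is a Wiener process running backward in time. The last three lines give the coefficients of the VE, VP, and sub-VP processes in the standard form of that paper, with σ(t) and β(t) the noise scales. The package's exact parameterization may differ.

```latex
\begin{aligned}
d\mathbf{x} &= \mathbf{f}(\mathbf{x}, t)\,dt + g(t)\,d\mathbf{w}
  && \text{(forward, } t: 0 \to T) \\
d\mathbf{x} &= \big[\mathbf{f}(\mathbf{x}, t) - g(t)^2\,\nabla_{\mathbf{x}} \log p_t(\mathbf{x})\big]\,dt + g(t)\,d\bar{\mathbf{w}}
  && \text{(reverse, } t: T \to 0) \\[4pt]
\text{VE:}\quad & \mathbf{f} = \mathbf{0}, \qquad g(t) = \sqrt{\tfrac{d\,[\sigma^2(t)]}{dt}} \\
\text{VP:}\quad & \mathbf{f} = -\tfrac{1}{2}\beta(t)\,\mathbf{x}, \qquad g(t) = \sqrt{\beta(t)} \\
\text{sub-VP:}\quad & \mathbf{f} = -\tfrac{1}{2}\beta(t)\,\mathbf{x}, \qquad g(t) = \sqrt{\beta(t)\big(1 - e^{-2\int_0^t \beta(s)\,ds}\big)}
\end{aligned}
```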

Among the most notable implementations is Stable Diffusion, which operates in a compressed latent space rather than the full pixel space, significantly reducing computational requirements. This approach allows for generating high-resolution images while maintaining training stability, using a Variational Autoencoder (VAE) to compress and decompress the representation before and after the diffusion process.

Colorization without Retraining

A notable advance in the field is CGDiff (Controllable Guided Diffusion), a method that allows for colorizing grayscale images without the need to retrain the model. This technique is based on manipulating the latent space of the diffusion model, guiding the generation process to preserve the structural information of the original image while adding plausible chromatic information.

The CGDiff approach is particularly interesting because it demonstrates the flexibility of diffusion models for conditional generation tasks, leveraging the knowledge already acquired by the model about the distribution of natural images.

Trends in Image Imputation

Image imputation, also known as inpainting, seeks to complete missing regions coherently with the surrounding content. The most recent diffusion-based techniques have surpassed previous methods thanks to their ability to generate more coherent and detailed completions.

The current state of research focuses on guided sampling techniques, where additional information is used to condition the generation process, allowing for precise control over the generated content. These techniques allow for preserving specific details while completing missing regions, maintaining the global coherence of the image.
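Guided sampling for imputation is commonly implemented by replacement. After every reverse step, the pixels to be preserved are overwritten with the known image noised to the current level, so only the masked region is actually generated. RePaint [6] builds on this step and adds a resampling schedule.

The NumPy sketch below shows a single replacement step under two assumptions. First, it uses a Gaussian perturbation kernel x_t = mean_coef * x_0 + std * z. Second, it follows the mask convention of this document's imputation example (1 = generate, 0 = preserve). The function name and arguments are illustrative, not the package's API.

```python
import numpy as np


def replacement_step(x_gen, x_known, mask, mean_coef, std, rng):
    """Combine a generated sample with the noised known image (one replacement step).

    mask == 1 marks pixels to generate and mask == 0 pixels to preserve.
    The known image is first perturbed to the current noise level, assuming a
    Gaussian kernel x_t = mean_coef * x_0 + std * z, so both parts match.
    """
    noisy_known = mean_coef * x_known + std * rng.standard_normal(x_known.shape)
    return mask * x_gen + (1.0 - mask) * noisy_known


rng = np.random.default_rng(0)
x_known = np.ones((3, 8, 8))   # image containing the region to preserve
x_gen = np.zeros((3, 8, 8))    # current reverse-diffusion state
mask = np.zeros((3, 8, 8))
mask[:, 2:6, 2:6] = 1.0        # central square to generate
# With std = 0 (the end of sampling) the preserved pixels are copied exactly.
out = replacement_step(x_gen, x_known, mask, mean_coef=1.0, std=0.0, rng=rng)
```

Inside a sampler, this step would be applied after every reverse update, using the kernel coefficients for the current time.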

Text-to-Image Generation

Although text-to-image generation is not the main focus of our project, the advances of models like DALL-E 2 and Imagen deserve mention, as they have demonstrated the capability of diffusion models to generate detailed images from textual descriptions. These models incorporate text representations learned by models like CLIP or T5, which allow aligning the semantic space of text with the visual space of images.

The relevance of these advances for our work lies in the conditioning techniques they employ, which in many cases are generalizable to other types of conditioning, such as class-based generation implemented in our project.
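One such technique is classifier-free guidance, used by text-to-image systems like DALL-E 2 and Imagen, which carries over directly to class labels. The sketch below shows only the combination rule. It assumes a network that can return both a class-conditional and an unconditional prediction, for example by training with the label randomly dropped. We do not claim that the package's class conditioning is implemented this way, and `classifier_free_guidance` and `guidance_scale` are illustrative names.

```python
import numpy as np


def classifier_free_guidance(pred_uncond, pred_cond, guidance_scale):
    """Blend unconditional and class-conditional predictions (score or noise).

    guidance_scale = 0 gives the unconditional prediction, 1 the conditional
    one, and values > 1 extrapolate towards the class, typically trading
    sample diversity for fidelity to the label.
    """
    return pred_uncond + guidance_scale * (pred_cond - pred_uncond)


# Toy predictions for a 2x2 "image"
uncond = np.array([[0.0, 1.0], [2.0, 3.0]])
cond = np.array([[1.0, 1.0], [1.0, 1.0]])
guided = classifier_free_guidance(uncond, cond, guidance_scale=3.0)
```

Some papers write the same rule as (1 + w) * cond - w * uncond, which corresponds to guidance_scale = 1 + w.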

2. Development

This chapter details the development process of the software package for image generation via diffusion models. It addresses the different phases of development, from initial planning to validation and testing, including requirements analysis and system architecture design.

Development has been carried out following an iterative and incremental approach, prioritizing code modularity and extensibility. This has allowed for progressively implementing the different functionalities and system components, facilitating continuous integration and unit testing.

The methodology employed has placed special emphasis on code quality, thorough documentation, and ease of use, both for end users and for developers wishing to extend or modify the system. Throughout the process, version control tools, automated testing, and integrated documentation have been used to ensure software robustness and maintainability.

The following sections describe in detail each aspect of the development, from planning to validation, including considerations about software quality assurance and other relevant issues.

2.1. Work Planning

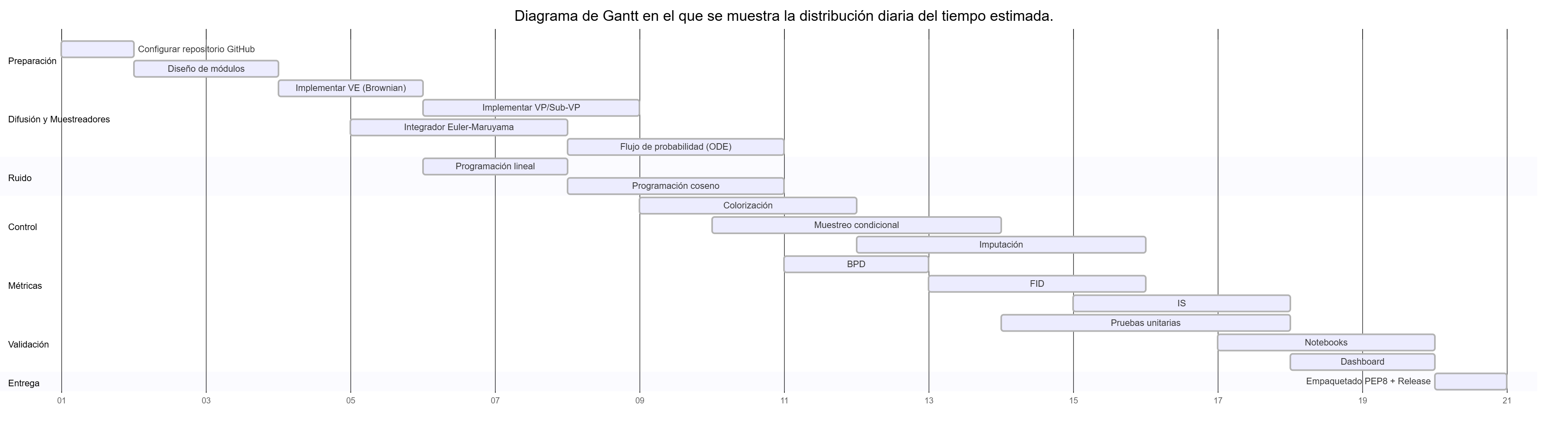

To carry out the work, we divided the tasks equitably and used a Gantt chart to track the deadlines approximately, bearing in mind that, for logistical reasons, we had less time than originally scheduled.

Although this was the original plan, we ultimately could not meet these deadlines and needed the full 50 days granted for the project’s completion. While most classes were finished on schedule according to the diagram, the additional features, the tuning of default parameters, and bug fixing took much longer than expected.

2.2. Requirements Analysis

2.2.1. Functional Requirements

The software must provide the following functionalities:

- RF-1. Allow image generation via diffusion models.

- RF-1.1. Support generating images from random noise.

- RF-1.2. Facilitate imputation of missing regions in partial images.

- RF-1.3. Include the option for generation conditioned on a specific class.

- RF-2. Offer configurations to control the parameters of the generative process.

- RF-3. Support different sampling methods for image generation.

- RF-4. Include metrics to evaluate the quality of generated images.

- RF-5. Provide an easy-to-use interface via a Python API.

- RF-6. Allow integration with Jupyter notebooks for demonstrations and testing.

2.2.2. Non-Functional Requirements

These are the constraints to which the software is subject:

- RNF-1. The generation time for a pixel image must not exceed:

- RNF-1.1. 5 seconds when running on a GPU with CUDA.

- RNF-1.2. 10 seconds when running on a CPU with at least 4 cores.

- RNF-2. The maximum RAM consumption must not exceed 8 GB during standard model execution.

- RNF-3. The code must follow Google’s style guides to ensure clarity and maintainability.

- RNF-4. The documentation must include usage examples to facilitate software adoption.

- RNF-5. The system must be compatible with Python 3.9 or higher and use standard machine learning libraries, including PyTorch 2.0.0.

2.2.3. Use Cases

The use cases describe how a user would interact with the system:

- CU-1. Generation of images from random noise: The user configures parameters such as size and sampling method. The system generates the image and returns it in a compatible format (PNG, JPG).

- CU-2. Imputation of missing regions in partial images: The user provides an image with missing areas. The system completes them based on the surrounding content and returns the reconstructed image.

- CU-3. Generation of images conditioned on a class: The user selects a category (e.g., “dog” or “cat”). The system generates the corresponding image and returns it in a compatible format.

2.3. Design

The Python module was designed with a focus on extensibility and modularity, following object-oriented design principles and programming to interfaces. The system architecture is based on abstract classes that define interfaces, along with concrete implementations that provide specific behaviors.

This structure facilitates both basic package usage for users with standard needs, and extension and customization for advanced users requiring specific behaviors. An example of this approach can be seen in the ‘samplers.ipynb’ notebook, which demonstrates how to use and combine different sampler implementations.

Design Patterns

Several design patterns have been applied to improve the structure and flexibility of the code:

- Strategy Pattern: Used in the different diffusers, samplers, and noise schedulers, allowing components to be easily swapped.

- Factory Pattern: Implemented in the GenerativeModel class to create instances of the different components based on configuration parameters.

- Observer Pattern: Used for tracking progress during image generation.
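The sketch below shows how the three patterns can fit together. It mirrors the string arguments accepted by `GenerativeModel(diffusion="ve", sampler="euler-maruyama")` in the usage examples. The class names, the `SAMPLERS` registry, `make_sampler`, and the `step`/`update` methods are hypothetical. The sampler updates are placeholders, not real numerical schemes.

```python
from abc import ABC, abstractmethod


class BaseSampler(ABC):
    """Strategy interface: any sampler can be swapped for any other."""

    @abstractmethod
    def step(self, x: float, t: float, dt: float) -> float:
        """Advance the sample by one reverse-time step."""


class EulerMaruyamaSampler(BaseSampler):
    def step(self, x, t, dt):
        return x - 0.1 * x * dt  # placeholder update, not the real scheme


class ODESampler(BaseSampler):
    def step(self, x, t, dt):
        return x - 0.2 * x * dt  # placeholder update, not the real scheme


# Hypothetical registry used by the factory below.
SAMPLERS = {"euler-maruyama": EulerMaruyamaSampler, "ode": ODESampler}


def make_sampler(spec):
    """Factory: build a sampler from a name, or pass through a ready instance."""
    if isinstance(spec, BaseSampler):
        return spec
    try:
        return SAMPLERS[spec.lower()]()
    except KeyError:
        raise ValueError(f"Unknown sampler {spec!r}; options: {sorted(SAMPLERS)}") from None


class ProgressLogger:
    """Observer: receives a notification after every sampling step."""

    def __init__(self):
        self.history = []

    def update(self, step, total):
        self.history.append((step, total))


def run_sampler(sampler, x, n_steps, observers=()):
    """Run any strategy for n_steps and notify every observer after each step."""
    dt = 1.0 / n_steps
    for i in range(n_steps):
        x = sampler.step(x, 1.0 - i * dt, dt)
        for observer in observers:
            observer.update(i + 1, n_steps)
    return x
```

Accepting either a name or an instance keeps the common case short while still letting advanced users pass their own `BaseSampler` subclass.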

Modular Architecture

The system architecture has been designed to maximize cohesion within each module and minimize coupling between modules. Each component has a clear and well-defined responsibility:

- Diffusion Module: Encapsulates the algorithms that define how noise is added and removed during the diffusion process.

- Sampling Module: Implements different strategies for numerically solving the stochastic differential equations of the diffusion process.

- Noise Scheduling Module: Defines how noise is distributed throughout the diffusion process.

- Metrics Module: Provides tools for evaluating the quality of generated images.
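Common definitions of the two schedule types the package offers are sketched below, with continuous time t in [0, 1]. The linear β(t) is the one of the VP SDE, using β_min = 0.1 and β_max = 20 as in Song et al. [3]. The cosine schedule is that of Nichol and Dhariwal (Improved DDPM), with offset s = 0.008. In both cases ᾱ(t) is the fraction of signal variance that survives at time t. These defaults and function names are assumptions, not necessarily the package's own.

```python
import math


def linear_beta(t, beta_min=0.1, beta_max=20.0):
    """Linear schedule: the noise rate grows linearly from beta_min (t=0) to beta_max (t=1)."""
    return beta_min + t * (beta_max - beta_min)


def linear_alpha_bar(t, beta_min=0.1, beta_max=20.0):
    """Signal fraction kept at time t for the linear schedule: exp(-integral_0^t beta(s) ds)."""
    integral = beta_min * t + 0.5 * t ** 2 * (beta_max - beta_min)
    return math.exp(-integral)


def cosine_alpha_bar(t, s=0.008):
    """Cosine schedule (Nichol & Dhariwal): alpha_bar(t) = f(t) / f(0)."""
    def f(u):
        return math.cos((u + s) / (1 + s) * math.pi / 2) ** 2
    return f(t) / f(0.0)


for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"t={t:.2f}  linear={linear_alpha_bar(t):.4f}  cosine={cosine_alpha_bar(t):.4f}")
```

With these defaults, the cosine schedule keeps noticeably more signal at intermediate times than the linear one. Avoiding destroying information too early was the motivation for proposing it.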

User Interface

The package provides two main interfaces:

- Programmatic API: A Python interface that allows access to all package functionalities through code.

- Interactive Dashboard: A Streamlit-based graphical interface that facilitates experimentation and demonstration of system capabilities without the need to write code. In addition to the local version, an online version (without GPU) can be accessed via https://image-gen-htd.streamlit.app/.

The dashboard represents a significant contribution to the system’s usability, allowing users with different levels of technical experience to interact with diffusion models in an intuitive way.

Extension System

A distinctive feature of the design is the dynamic loading system for custom classes, which allows users to extend system behavior without modifying the base code. This functionality is implemented through the CustomClassWrapper class, which provides secure mechanisms for loading and executing user-defined code.

This class is managed internally by the system, and users can load models with custom classes as if they were loading a normal one, improving the user experience and allowing for greater flexibility in system customization.
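The confirm-then-execute idea can be sketched as follows, under several assumptions that are not the internals of `CustomClassWrapper`:

- The bundle is a dict holding the class name, its source code, and its trained parameters.
- Custom classes are expected to provide a `load_params` method.
- The code runs with a hypothetical allow-list of built-ins.

Stripping built-ins in CPython is not a real sandbox, because code can still reach dangerous objects through introspection. The explicit confirmation therefore remains the main safeguard.

```python
import builtins

# Hypothetical allow-list: the only built-ins the bundled code can see.
_ALLOWED = ("__build_class__", "super", "object", "dict", "list", "len",
            "range", "min", "max", "abs", "float", "int", "isinstance")
_SAFE_BUILTINS = {name: getattr(builtins, name) for name in _ALLOWED}


def bundle_custom_class(class_name, source, params):
    """Store the class definition (as source text) next to its trained parameters."""
    return {"class_name": class_name, "source": source, "params": params}


def load_custom_class(bundle, confirm=input):
    """Ask before running bundled code, then execute it with restricted built-ins."""
    prompt = f"The model contains custom class {bundle['class_name']!r}. Run its code? [y/N] "
    if confirm(prompt).strip().lower() not in ("y", "yes"):
        raise PermissionError("Loading cancelled: custom code was not approved.")
    # __import__ is not in the allow-list, so any `import` in the source fails.
    namespace = {"__builtins__": _SAFE_BUILTINS, "__name__": "custom_classes"}
    exec(bundle["source"], namespace)
    instance = namespace[bundle["class_name"]]()
    instance.load_params(bundle["params"])
    return instance


SOURCE = (
    "class Scaler:\n"
    "    def __init__(self):\n"
    "        self.params = {}\n"
    "    def load_params(self, params):\n"
    "        self.params = dict(params)\n"
)
bundle = bundle_custom_class("Scaler", SOURCE, {"weight": 2.0})
scaler = load_custom_class(bundle, confirm=lambda _: "y")  # auto-approve for the demo
```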

2.3.1. Package Structure

Regarding the file structure, the module code has been distributed into subfolders as follows:

diffusion/
|-- base.py
|-- ve.py
|-- vp.py
|-- sub_vp.py
metrics/
|-- base.py
|-- bpd.py
|-- fid.py
|-- inception.py
noise/
|-- base.py
|-- linear.py
|-- cosine.py
samplers/
|-- base.py
|-- euler_maruyama.py
|-- exponential.py
|-- ode.py
|-- predictor_corrector.py
base.py
score_model.py
visualization.py
utils.py

Additionally, the project includes code for generating an interactive dashboard, tests, documentation, and an additional folder containing example notebooks. The complete code structure would be as follows:

dashboard/
|-- (styles and languages)
.streamlit/
|-- config.toml
dashboard.py
examples/
|-- class_conditioning.ipynb
|-- colorization.ipynb
|-- diffusers.ipynb
|-- evaluation.ipynb
|-- getting_started.ipynb
|-- imputation.ipynb
|-- noise_schedulers.ipynb
|-- samplers.ipynb
docs/
|-- (markdown with documentation)
tests/
|-- (various tests)

2.3.2. Class Diagram

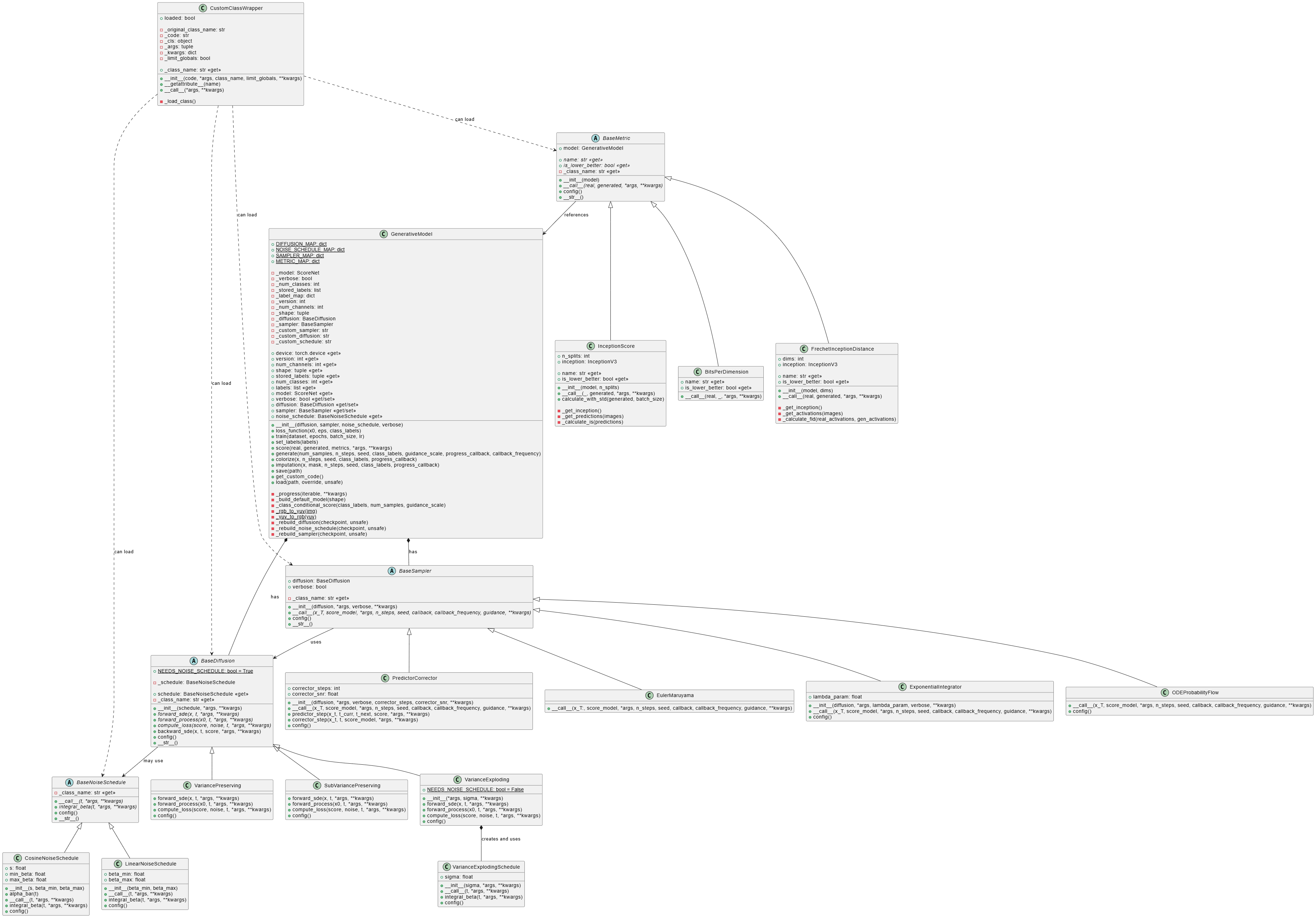

Our package’s architecture follows object-oriented programming and modular design principles. The class diagram in Figure “Class diagram of the image generation package” shows the relationships between the main components of the system.

The diagram illustrates the hierarchical structure of the classes and their interactions:

- The GenerativeModel class acts as the central point, coordinating the diffusion, sampling, and noise scheduling components.

- Each category of components (diffusers, samplers, and noise schedulers) follows a design pattern with abstract base classes that define common interfaces.

- Concrete implementations inherit from these base classes and provide specific behaviors.

- The metrics system follows a similar design, with a base class BaseMetric and specializations for each specific metric.
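As an illustration of a `BaseMetric` specialization, the sketch below computes the Fréchet distance used by FID between Gaussians fitted to two sets of feature vectors. The distance is ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2 (Sigma_r Sigma_g)^(1/2)). This is a NumPy sketch, not the package's `fid.py`, and its `__call__` signature is an assumption. It also omits the Inception-v3 feature extraction that turns images into feature vectors.

```python
from abc import ABC, abstractmethod

import numpy as np


class BaseMetric(ABC):
    """Common interface for metrics (signature assumed for this sketch)."""

    @abstractmethod
    def __call__(self, real_features, generated_features) -> float:
        """Compare two (n_samples, n_features) arrays."""


class FrechetDistance(BaseMetric):
    """Fréchet distance between Gaussians fitted to two feature sets.

    With Inception-v3 pool features this is the FID.
    """

    def __call__(self, real_features, generated_features) -> float:
        mu1, mu2 = real_features.mean(axis=0), generated_features.mean(axis=0)
        s1 = np.cov(real_features, rowvar=False)
        s2 = np.cov(generated_features, rowvar=False)
        # Tr((S1 S2)^(1/2)) equals the sum of square roots of the eigenvalues of
        # S1^(1/2) S2 S1^(1/2), which is symmetric PSD and numerically safer.
        w, v = np.linalg.eigh(s1)
        s1_sqrt = (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T
        eig = np.linalg.eigvalsh(s1_sqrt @ s2 @ s1_sqrt)
        tr_sqrt = np.sqrt(np.clip(eig, 0.0, None)).sum()
        return float(np.sum((mu1 - mu2) ** 2) + np.trace(s1) + np.trace(s2) - 2.0 * tr_sqrt)


rng = np.random.default_rng(0)
real = rng.normal(size=(500, 4))
fid = FrechetDistance()
same = fid(real, real)             # approximately 0: identical distributions
shifted = fid(real, real + 3.0)    # approximately 4 * 3**2 = 36: mean shift only
```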

This design allows for system extensibility, facilitating the addition of new implementations without modifying existing code. For example, a user can create a new type of diffuser by inheriting from BaseDiffusion and implementing the required abstract methods.
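The sketch below shows what such an extension could look like. The method names `drift` and `diffusion`, standing for the coefficients f and g of the forward SDE, are hypothetical stand-ins for the real abstract methods of `BaseDiffusion`. Scalars replace tensors to keep the sketch short, and the VE parameters σ_min = 0.01 and σ_max = 50 are illustrative.

```python
import math
from abc import ABC, abstractmethod


class BaseDiffusion(ABC):
    """Diffuser interface: coefficients of the forward SDE dx = f(x, t) dt + g(t) dw."""

    @abstractmethod
    def drift(self, x: float, t: float) -> float:
        """f(x, t)."""

    @abstractmethod
    def diffusion(self, t: float) -> float:
        """g(t)."""


class VarianceExploding(BaseDiffusion):
    """VE SDE with geometric noise levels sigma(t) = sigma_min * (sigma_max / sigma_min) ** t."""

    def __init__(self, sigma_min: float = 0.01, sigma_max: float = 50.0):
        self.sigma_min, self.sigma_max = sigma_min, sigma_max

    def drift(self, x, t):
        return 0.0  # VE never shrinks the signal, it only adds noise

    def diffusion(self, t):
        ratio = self.sigma_max / self.sigma_min
        sigma = self.sigma_min * ratio ** t
        return sigma * math.sqrt(2.0 * math.log(ratio))  # sqrt(d sigma^2 / dt)


class ConstantBetaVP(BaseDiffusion):
    """A user-defined diffuser: VP SDE with a constant noise rate beta."""

    def __init__(self, beta: float = 1.0):
        self.beta = beta

    def drift(self, x, t):
        return -0.5 * self.beta * x

    def diffusion(self, t):
        return math.sqrt(self.beta)
```

Because `BaseDiffusion` declares abstract methods, forgetting to implement one raises a `TypeError` at instantiation rather than failing later during sampling.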

The design’s flexibility is also reflected in how the different components can be combined. For example, any diffuser can be used with any sampler, as long as both correctly implement their respective interfaces.
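To illustrate this interchangeability, the following sketch implements an Euler-Maruyama sampler for the reverse-time SDE. The sampler touches the diffuser only through its `drift` and `diffusion` methods (hypothetical names) and a score function. To keep it self-contained and checkable, the data follow a one-dimensional Gaussian N(2, 0.25), so the exact score replaces the trained network. The package's samplers operate on image tensors instead.

```python
import math
import random


class ToyVP:
    """Toy diffuser exposing the drift/diffusion interface (constant beta)."""

    def __init__(self, beta=10.0):
        self.beta = beta

    def drift(self, x, t):
        return -0.5 * self.beta * x

    def diffusion(self, t):
        return math.sqrt(self.beta)


def gaussian_score(diffuser, m0, v0):
    """Exact score of the perturbed marginal when the data are N(m0, v0)."""
    def score(x, t):
        decay = math.exp(-diffuser.beta * t)
        mean_t = m0 * math.sqrt(decay)
        var_t = v0 * decay + 1.0 - decay
        return -(x - mean_t) / var_t
    return score


def euler_maruyama(diffuser, score, x_T, n_steps=500, t_end=1e-3, rng=None):
    """Integrate the reverse-time SDE from t = 1 down to t = t_end."""
    rng = rng if rng is not None else random.Random()
    dt = (1.0 - t_end) / n_steps
    x, t = x_T, 1.0
    for _ in range(n_steps):
        g = diffuser.diffusion(t)
        reverse_drift = diffuser.drift(x, t) - g * g * score(x, t)
        # Backward step: x_{t-dt} = x_t - reverse_drift * dt + g * sqrt(dt) * z
        x = x - reverse_drift * dt + g * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        t -= dt
    return x


# Toy run: data ~ N(2, 0.25); start from the prior N(0, 1) at t = 1.
rng = random.Random(0)
vp = ToyVP(beta=10.0)
score = gaussian_score(vp, m0=2.0, v0=0.25)
samples = [euler_maruyama(vp, score, rng.gauss(0.0, 1.0), rng=rng) for _ in range(500)]
```

Swapping `ToyVP` for any other object with the same two methods, together with a matching score, requires no change to `euler_maruyama`.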

2.4. Validation and Testing

The validation and testing process has been an essential component in the development of our software package, ensuring that all functionalities meet the established requirements and provide correct and consistent results.

Testing Strategy

- Unit Tests: Verify the correct behavior of isolated individual components.

- Integration Tests: Validate the correct interaction between different modules.

- System Tests: Check the functioning of the complete system in real usage scenarios.

- Performance Tests: Evaluate execution times and resource consumption.

Unit Tests

Unit tests have been implemented using the pytest framework, with a fixture-based approach to facilitate the setup of test scenarios. Specific tests have been developed for each system module:

- test_diffusion.py: Verifies the behavior of the different diffusion processes.

- test_samplers.py: Checks the functioning of the sampling methods.

- test_noise.py: Validates the noise schedulers.

- test_metrics.py: Ensures the correct implementation of evaluation metrics.

- test_base.py: Tests the functionality of the GenerativeModel class.

Unit tests cover both typical cases and edge and error scenarios, ensuring that all components properly handle exceptional situations.
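A minimal example of this fixture-based style covers a typical case, an edge case, and an error case. The function under test, `make_time_grid`, is a hypothetical helper that builds a decreasing grid of diffusion times for a sampler. It is not part of the package.

```python
import pytest


def make_time_grid(n_steps, t_end=1e-3):
    """Hypothetical helper under test: times from 1.0 down to t_end for a sampler."""
    if n_steps < 1:
        raise ValueError("n_steps must be a positive integer")
    dt = (1.0 - t_end) / n_steps
    return [1.0 - i * dt for i in range(n_steps + 1)]


@pytest.fixture
def grid():
    """Shared setup reused by several tests."""
    return make_time_grid(500)


def test_grid_is_decreasing_and_has_right_length(grid):
    assert len(grid) == 501
    assert all(a > b for a, b in zip(grid, grid[1:]))


def test_grid_endpoints(grid):
    assert grid[0] == 1.0
    assert grid[-1] == pytest.approx(1e-3)


def test_single_step_edge_case():
    assert make_time_grid(1, t_end=0.5) == pytest.approx([1.0, 0.5])


@pytest.mark.parametrize("bad", [0, -3])
def test_invalid_step_count_raises(bad):
    with pytest.raises(ValueError):
        make_time_grid(bad)
```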

Integration Tests

Integration tests, implemented in test_integration.py, verify the correct interaction between the different system modules. These tests simulate complete workflows, including:

- Model training and image generation.

- Image colorization and imputation.

- Class-conditioned generation.

- Model saving and loading.

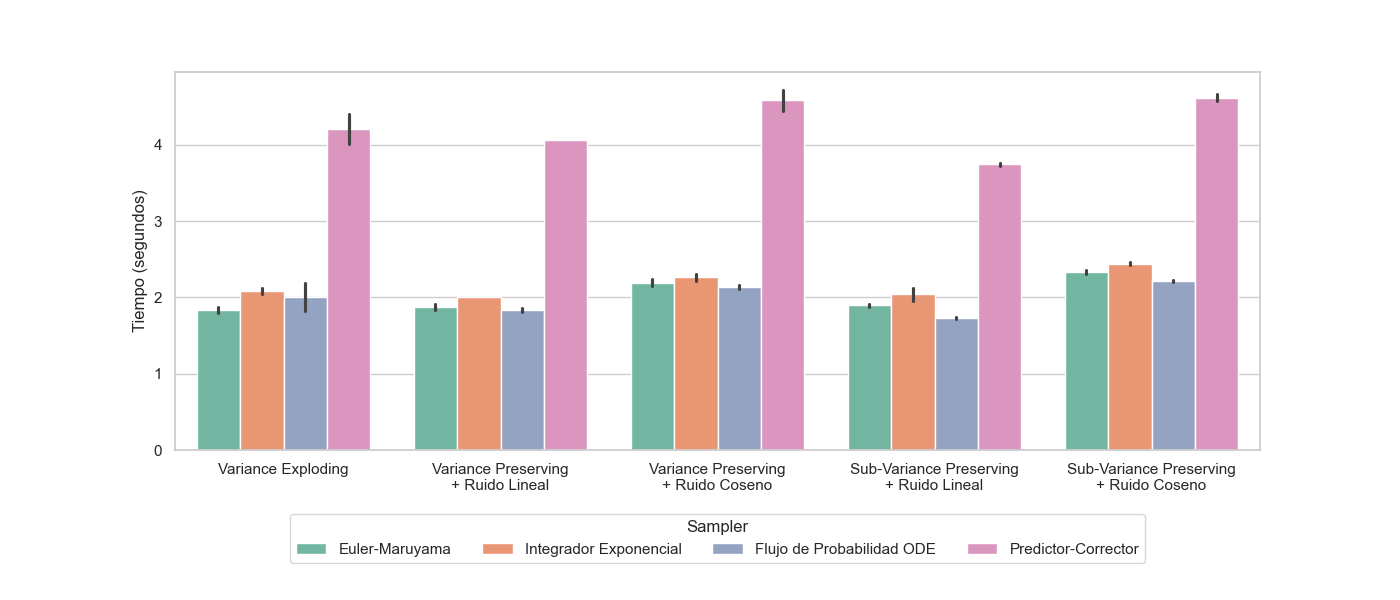

Performance Tests

Performance tests have been conducted to evaluate:

- Generation times for different configurations (see Figure “Generation times per configuration and sampler”).

- Memory consumption during training and generation.

- Scalability with the number of steps.

Exhaustive comparisons can be found in the appendix Exhaustive Comparisons.
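Generation times of this kind can be measured with a small harness such as the one below. It is a sketch, not the project's benchmarking code, and `benchmark` is a hypothetical name. Warm-up runs are discarded. Because CUDA kernels run asynchronously, a GPU measurement should call `torch.cuda.synchronize()` inside the timed callable before it returns.

```python
import statistics
import time


def benchmark(generate, n_runs=5, warmup=1):
    """Time a zero-argument callable; return (mean, standard deviation) in seconds."""
    for _ in range(warmup):
        generate()  # discard one-off costs such as lazy initialization
    durations = []
    for _ in range(n_runs):
        start = time.perf_counter()
        generate()
        durations.append(time.perf_counter() - start)
    spread = statistics.stdev(durations) if n_runs > 1 else 0.0
    return statistics.mean(durations), spread


# Stand-in workload; with the package one would time something like
# lambda: model.generate(num_samples=4, n_steps=500) for each configuration.
mean_s, std_s = benchmark(lambda: sum(i * i for i in range(10_000)), n_runs=3)
```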

Compatibility and Environments

The package’s compatibility with various hardware configurations has been verified:

- Systems with CUDA GPU.

- Systems with CPU only.

- Different operating systems (Windows and Linux).

2.5. Software Quality Assurance

Quality assurance has been a fundamental aspect in the development of our software package. We have implemented various practices and tools to ensure that the code is robust, maintainable, and compliant with industry standards.

Version Control

Development has been carried out using Git as a version control system, with a repository hosted on GitHub. This has facilitated:

- Detailed tracking of code changes.

- Coordinated collaborative development.

- Code review via pull requests.

The package source code can be found at the following link: https://github.com/HectorTablero/image-gen.

Coding Standards

The code has been developed following Google’s style guides for Python, ensuring consistency and readability.

Documentation

Documentation has been a priority throughout development, implemented at several levels:

- API Documentation: Automatically generated from docstrings in Google format.

- User Documentation: Includes manuals, tutorials, and usage examples.

- Example Notebooks: Demonstrate specific use cases with executable examples.

- Code Comments: Explain complex or non-intuitive sections.

Documentation is automatically generated using MkDocs and published via GitHub Pages, ensuring it is always up to date with the latest code version. It can be consulted here: https://hectortablero.github.io/image-gen/.

Additionally, a version of the documentation generated by Devin can be found at the following link: https://deepwiki.com/HectorTablero/image-gen.

Dependency Management

Project dependencies are managed via:

- requirements.txt and pyproject.toml files that specify dependencies and their versions.

- Virtual environments to isolate development and avoid conflicts.

2.6. Other Considerations

The software developed in this project is distributed under the MIT license, which allows its use, modification, and redistribution without significant restrictions, provided the original attribution is maintained. However, no warranties of any kind are provided, and the developers assume no liability for its use.

The user is solely responsible for the use of the software and must ensure compliance with the applicable laws in their jurisdiction. In particular, using the software to generate inappropriate or misleading content, or content that infringes third-party rights, is strictly discouraged.

3. Results

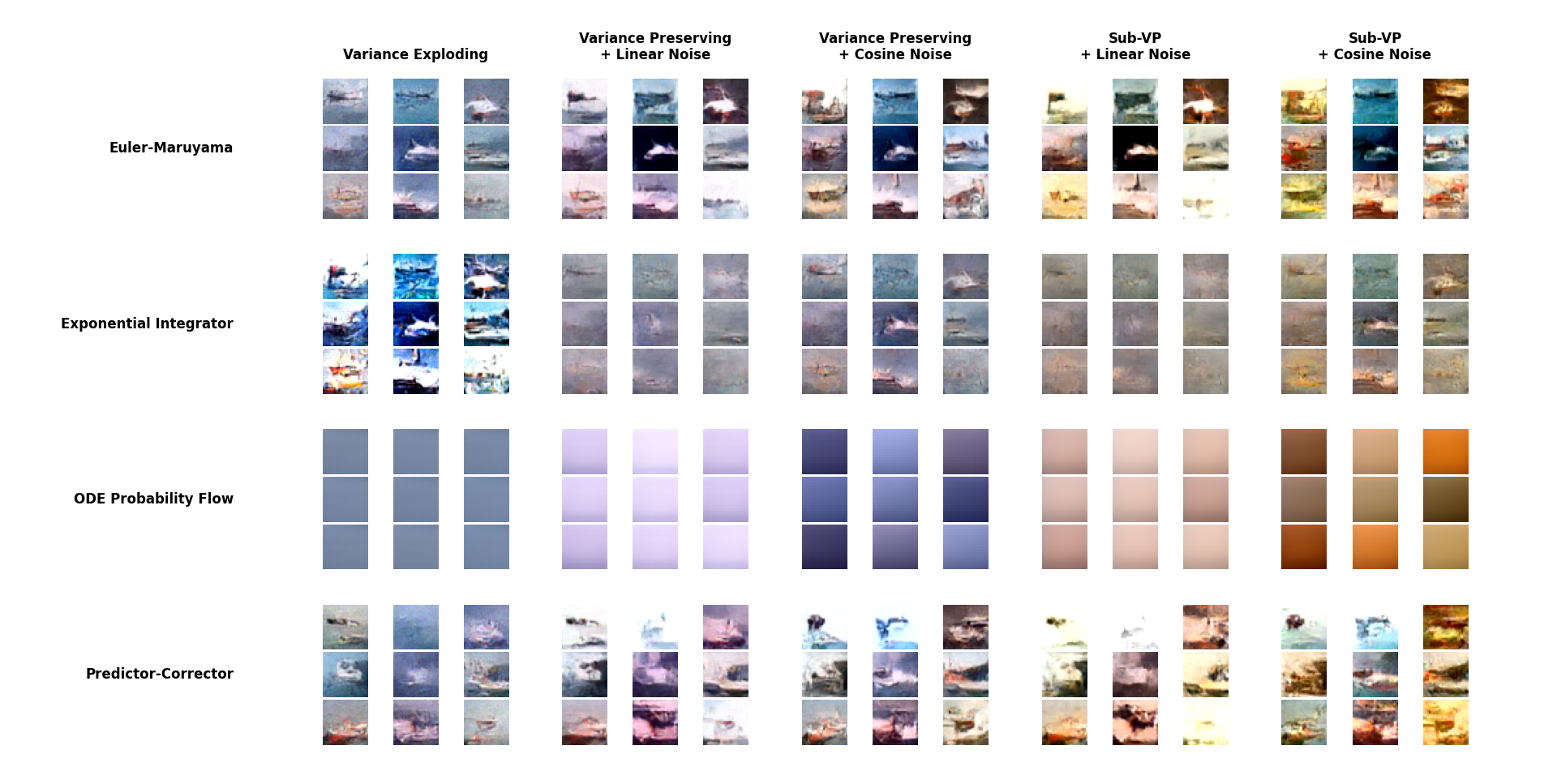

Below are images generated by models trained on the ship images of the CIFAR-10 dataset (Figure “Generated images training with CIFAR-10, 100 epochs”) and on the images of the digit four from MNIST (Figure “Generated images training with MNIST, 100 epochs”):

- Sub-Variance Preserving is the diffuser that takes the longest to learn the data distribution. In this case, the model was trained for only 100 epochs, so it has not had enough time to learn to distinguish ships from the rest of the dataset.

- ODE (the Probability Flow ODE sampler) is deterministic and, in these experiments, tends to produce images close to the mean of the learned data distribution, so it may not generate satisfactory results in some cases.

In the case of MNIST, generation is considerably better.
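The determinism of the ODE sampler can be seen in a toy setting. The sketch below integrates the probability flow ODE of Song et al. [3], dx = [f(x, t) - ½ g(t)² ∇ₓ log p_t(x)] dt, with a simple Euler scheme. It uses a constant-β VP process and one-dimensional Gaussian data, with the exact score in place of a trained network. All names and values are illustrative. Unlike the stochastic samplers, no noise is injected, so the same starting noise always yields the same output.

```python
import math

BETA, M0, V0 = 10.0, 2.0, 0.25  # toy VP noise rate; data distribution N(M0, V0)


def score(x, t):
    """Exact score of the VP marginal for Gaussian data (stands in for the network)."""
    decay = math.exp(-BETA * t)
    return -(x - M0 * math.sqrt(decay)) / (V0 * decay + 1.0 - decay)


def probability_flow_ode(x_T, n_steps=500, t_end=1e-3):
    """Euler integration of dx/dt = f(x, t) - 0.5 * g(t)**2 * score(x, t) from t = 1 to t_end."""
    dt = (1.0 - t_end) / n_steps
    x, t = x_T, 1.0
    for _ in range(n_steps):
        velocity = -0.5 * BETA * x - 0.5 * BETA * score(x, t)
        x -= velocity * dt  # step backwards in time; no noise is injected
        t -= dt
    return x


first = probability_flow_ode(0.7)
second = probability_flow_ode(0.7)  # same starting noise ...
print(first == second)              # ... same result: True
```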

3.1. Usage Examples

In this section, we present various usage examples of our package that illustrate its capabilities and functionalities. These examples are available as Jupyter notebooks in the project repository, allowing users to reproduce them and experiment with different configurations.

Basic Image Generation

The following code initializes a generative model with the Variance Exploding diffuser and the Euler-Maruyama sampler, trains it, and generates a set of images:

from image_gen import GenerativeModel
from image_gen.visualization import display_images

# `dataset` is a placeholder for a dataset of training images
# Initialize model with Variance Exploding diffusion and Euler-Maruyama sampler
model = GenerativeModel(diffusion="ve", sampler="euler-maruyama")
model.train(dataset, epochs=25)

# Generate 4 images with 500 sampling steps
images = model.generate(num_samples=4, n_steps=500, seed=42)

# Visualize the generated images
display_images(images)

Figure: Basic Generation

Image Colorization

The following example shows how to use the model to colorize a grayscale image:

import torch
from image_gen import GenerativeModel
from image_gen.visualization import display_images

# Load a pre-trained model
model = GenerativeModel.load("saved_models/cifar10.pth")

# Create a grayscale image (averaging RGB channels)
color_image = model.generate(num_samples=1)[0]  # (C, H, W)
gray_image = torch.mean(color_image, dim=0, keepdim=True).unsqueeze(0)  # (1, 1, H, W)

# Colorize the image
colorized = model.colorize(gray_image, n_steps=500)

# Visualize original in grayscale and colorized version
display_images(gray_image)
display_images(colorized)

Figure: Image Colorization
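One generic way to colorize with an unconditional diffusion model is to alternate reverse-diffusion steps with a projection. The projection forces the channel average of the current estimate to match the grayscale input, suitably noised to the current level. This follows the same spirit as replacement-based inpainting. We sketch it as an assumption, not as a description of `model.colorize` or of CGDiff. The projection itself is one line of NumPy, and `project_to_grayscale` is a hypothetical name. It uses the same channel-average definition of grayscale as the example above.

```python
import numpy as np


def project_to_grayscale(x, gray):
    """Project colour images onto the set {x : x.mean(axis=1) == gray}.

    x:    (batch, 3, H, W) current colour estimate.
    gray: (batch, 1, H, W) grayscale target, defined as the channel average.
    Adding the same luminance correction to every channel is the orthogonal
    projection onto that set, so the chroma (differences between channels)
    proposed by the model is left untouched.
    """
    return x + (gray - x.mean(axis=1, keepdims=True))


rng = np.random.default_rng(0)
estimate = rng.normal(size=(2, 3, 4, 4))  # e.g. an intermediate sampler state
target = rng.uniform(size=(2, 1, 4, 4))   # grayscale input
consistent = project_to_grayscale(estimate, target)
```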

Region Imputation

This example shows how to perform imputation of missing regions in an image:

import torch
from image_gen import GenerativeModel
from image_gen.visualization import display_images

# Load model
model = GenerativeModel.load("saved_models/cifar10.pth")

# Generate base image, shape (1, C, H, W)
base_image = model.generate(num_samples=1)

# Create mask (1 = region to generate, 0 = preserve)
mask = torch.zeros_like(base_image)
h, w = base_image.shape[2], base_image.shape[3]
mask[:, :, h//4:3*h//4, w//4:3*w//4] = 1  # Central rectangular mask

# Perform imputation
results = model.imputation(base_image, mask, n_steps=500)

# Visualize result
display_images(torch.cat([base_image, results], dim=0))

Figure: Image Imputation

Additional examples can be consulted in the appendix Additional Examples, where more advanced use cases are presented.

3.2. Conclusions

The development of this diffusion model-based image generation package has allowed for the implementation and evaluation of different variants of these models, demonstrating their effectiveness in various generation tasks, from creating images from random noise to more specific tasks like colorization and imputation.

Achievements and Contributions

The main achievements and contributions of this project are:

- Implementation of three variants of diffusion processes (VE, VP, and Sub-VP), allowing for comparison of their performance in different scenarios.

- Development of four sampling methods with different quality and efficiency characteristics, providing flexibility for different use cases.

- Incorporation of controllable generation capabilities that significantly expand the package’s utility.

- Creation of a modular and extensible architecture that facilitates the incorporation of new components.

- Development of an interactive dashboard that significantly improves the package’s accessibility.

- Implementation of standard metrics for the quantitative evaluation of generated image quality.

Experimental results confirm that diffusion models represent a viable alternative to other generative techniques, with the additional advantage of more stable training and a solid theoretical framework based on stochastic differential equations.

Limitations

Despite the positive results, it is also important to recognize the current limitations of the package:

- Generation time remains considerably higher than that of other generative techniques like GANs, especially when more sophisticated sampling methods are used.

- The quality of generated images, although good, still does not reach the level of more specialized implementations like Stable Diffusion, which operate in compressed latent spaces.

- Training models for high-resolution images requires significant computational resources that have not been fully explored in this project.

Future Work

Based on the results obtained and the limitations identified, several lines of future work could improve and extend this package:

- Implementation of latent space diffusion, following the Stable Diffusion approach, to allow efficient generation of higher-resolution images.

- Incorporation of sampling acceleration techniques, such as DDIM and DPM-Solver, which could significantly reduce generation time.

- Extension to multimodal generation, particularly text-to-image generation.

- Development of semantic editing capabilities, allowing modification of specific attributes of generated images.

- Optimization of computational performance, especially for use on hardware with limited resources.

Final Considerations

This project demonstrates the potential of diffusion models as a versatile tool for various image generation tasks. The developed modular architecture provides a solid foundation for future developments and extensions, both in academic and practical applications.

We believe that the combination of a well-structured object-oriented design, thorough documentation, and interactive tools like the dashboard significantly contributes to the package’s accessibility and utility, facilitating its adoption by researchers and developers interested in image generation via diffusion models.

In conclusion, this project has not only fulfilled the initial objectives of implementing different variants of diffusion models and evaluating their performance but has also generated a useful and extensible software package that can serve as a foundation for future research and applications.

Bibliography

- [1] J. Sohl-Dickstein, E. Weiss, N. Maheswaranathan, S. Ganguli. "Deep unsupervised learning using nonequilibrium thermodynamics". Proceedings of the 32nd International Conference on Machine Learning (ICML). (2015).

- [2] J. Ho, A. Jain, P. Abbeel. "Denoising Diffusion Probabilistic Models". Advances in Neural Information Processing Systems (NeurIPS). (2020). https://arxiv.org/abs/2006.11239

- [3] Y. Song, J. Sohl-Dickstein, D. P. Kingma, A. Kumar, S. Ermon, B. Poole. "Score-Based Generative Modeling through Stochastic Differential Equations". International Conference on Learning Representations (ICLR). (2021). https://arxiv.org/abs/2011.13456

- [4] R. Rombach, A. Blattmann, D. Lorenz, P. Esser, B. Ommer. "High-resolution image synthesis with latent diffusion models". Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2022).

- [5] Y. Song, Q. Yang, X. Lu, L. Chen. "CGDiff: Controllable guided diffusion models for blind image colorization". Proceedings of the 31st ACM International Conference on Multimedia. (2023).

- [6] A. Lugmayr, M. Danelljan, A. Romero, F. Yu, R. Timofte, L. Van Gool. "RePaint: Inpainting using denoising diffusion probabilistic models". Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2022).

- [7] C. Saharia, W. Chan, H. Chang, C. Lee, J. Ho, T. Salimans, D. J. Fleet, M. Norouzi. "Palette: Image-to-image diffusion models". ACM SIGGRAPH 2022 Conference Proceedings. (2022).

- [8] P. Dhariwal, A. Nichol. "Diffusion Models Beat GANs on Image Synthesis". Advances in Neural Information Processing Systems (NeurIPS). (2021). https://arxiv.org/abs/2105.05233

- [9] A. Ramesh, P. Dhariwal, A. Nichol, C. Chu, M. Chen. "Hierarchical text-conditional image generation with CLIP latents". arXiv preprint arXiv:2204.06125. (2022). https://arxiv.org/abs/2204.06125

- [10] C. Saharia, W. Chan, S. Saxena, L. Li, J. Whang, E. L. Denton, K. Ghasemipour, R. Gontijo Lopes, B. Karagol Ayan, T. Salimans, J. Ho, D. J. Fleet, M. Norouzi. "Photorealistic text-to-image diffusion models with deep language understanding". Advances in Neural Information Processing Systems (NeurIPS). (2022).

- [11] Google. "Google style guides". (2022).

- [12] Cognition AI. "Introducing Devin, the first AI software engineer". (2024).

- [13] J. Song, C. Meng, S. Ermon. "Denoising diffusion implicit models". arXiv preprint arXiv:2010.02502. (2020). https://arxiv.org/abs/2010.02502

- [14] C. Lu, Y. Zhou, F. Bao, J. Chen, C. Li, J. Zhu. "DPM-Solver: A fast ODE solver for diffusion probabilistic model sampling in around 10 steps". arXiv preprint arXiv:2206.00927. (2022). https://arxiv.org/abs/2206.00927